Hi everyone, I hope you’re doing well.

In this blog post, I’ll discuss DM Multipath and why it is so important when we talk about databases.

OK, let’s first understand how disks interact with databases, and vice versa.

If we think about relational databases, a database is basically an organized collection of structured information, or data, typically STORED electronically in a computer system.

Did you catch the highlighted word? STORED.

Yes, a database must be stored somewhere to be accessible. It must be stored on a disk (or a set of disks) so that you can read from and write to it, which basically leads to physical reads/writes on the disk(s) where the database is stored.

OK, I am assuming that if you are reading my blog, you are already a DBA, and of course you know that your Oracle Database is stored on disks.

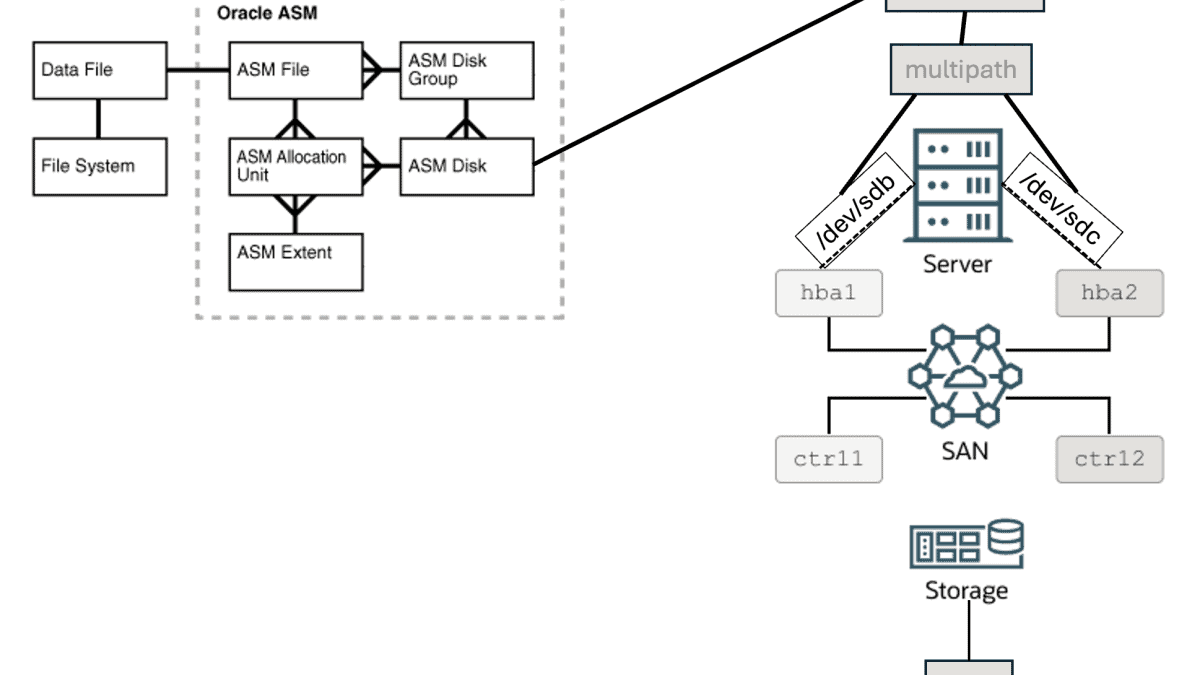

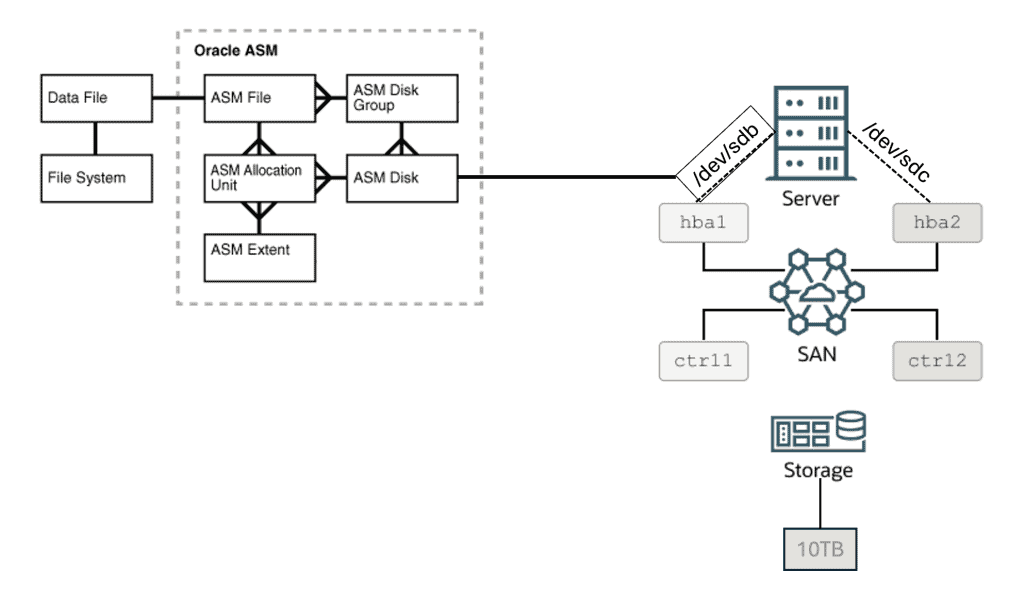

Below we have the physical storage structures of the Oracle ASM components, with a small change that I made.

You can read about the above image here:

So, in the above picture, we have one ASM disk, visible on the OS side as /dev/sdb, with 10TB of capacity. That’s fine. Now, let’s imagine that this disk, shown as /dev/sdb, is not a local disk attached to the server. Instead, it comes from a storage system.

Let’s now take a high-level look at how a disk is presented from the storage to the DB server.

A storage system is a solution that plays a crucial role in storing, managing and protecting data. Basically, it is composed of:

- A set of disks, which can be of several types: Hard Disk Drives (HDDs), Solid-State Drives (SSDs), hybrid drives (combined SSD and HDD), enterprise SAS/SATA SSDs, NVMe SSDs, etc.;

- Storage controllers, which manage the storage media and handle the communication between the storage system and the devices accessing it;

- A storage enclosure, which houses the storage media and provides physical protection and connectivity for it;

- The storage network infrastructure, which is how the storage connects to other servers (Fibre Channel, iSCSI, etc.);

- Storage software, which manages all the functionality of the storage: data management, protection, snapshots, replication, deduplication, etc.;

- Redundancy and high-availability features, such as RAID, mirroring and clustering, which ensure data availability and protect against hardware failures;

- Other components.

So, a storage system can offer plenty of capacity (we can even talk about exabytes).

Your storage is usually connected to a network (remember the storage network infrastructure), which we call a SAN (Storage Area Network). A SAN is a specialized network architecture that enables multiple servers or hosts to access shared storage resources efficiently and centrally. In a SAN environment, storage devices such as disk arrays are connected to a dedicated network infrastructure, allowing servers to access storage resources as if they were locally attached. So, when you work in a SAN environment, it’s common to hear the term “present the disk to the server”, which means granting a specific server (or set of servers) access to a specific amount of capacity (a disk).

Let’s think about how disks are “presented” from the storage to DB servers. Imagine we have a storage system with 2 petabytes of capacity, which means 2,048 terabytes (counting in binary units). We need to present a 10TB disk to a database server. I could present a single physical 10TB disk from the storage to the DB server, but using a single disk puts us at risk: what if that disk fails? If it does, the disk becomes unavailable and our data may be lost. We must avoid this.

This is the reason why storage systems use arrays of disks (and let’s assume here that all protection features are properly configured). So, if we have to present a 10TB disk to the DB server, we are not going to present a single physical disk, but a small portion of an array of disks. This small portion is usually called a LUN (Logical Unit Number). Essentially, a LUN is a portion of the storage system’s capacity that is made available to the host for storage purposes; in our case, as a disk to be used as an ASM disk.

A storage system can also have more than one controller (for redundancy), so let’s imagine our storage has two storage controllers. Let’s also imagine that our DB server has two HBAs (Host Bus Adapters). An HBA is a hardware component used in SANs to connect servers or hosts to storage devices such as disk arrays. A DB server usually has multiple HBAs, again for redundancy.

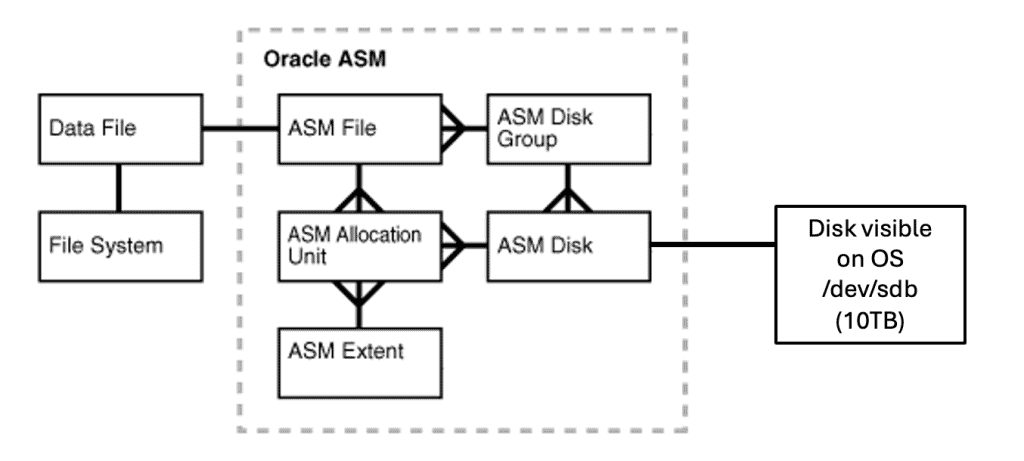

For the scenario below, let’s assume we are using a Linux system (RHEL/OEL).

With that said, let’s imagine that we are going to present a 10TB LUN to the database server, that our storage has 2 controllers and that our DB server has 2 HBAs.

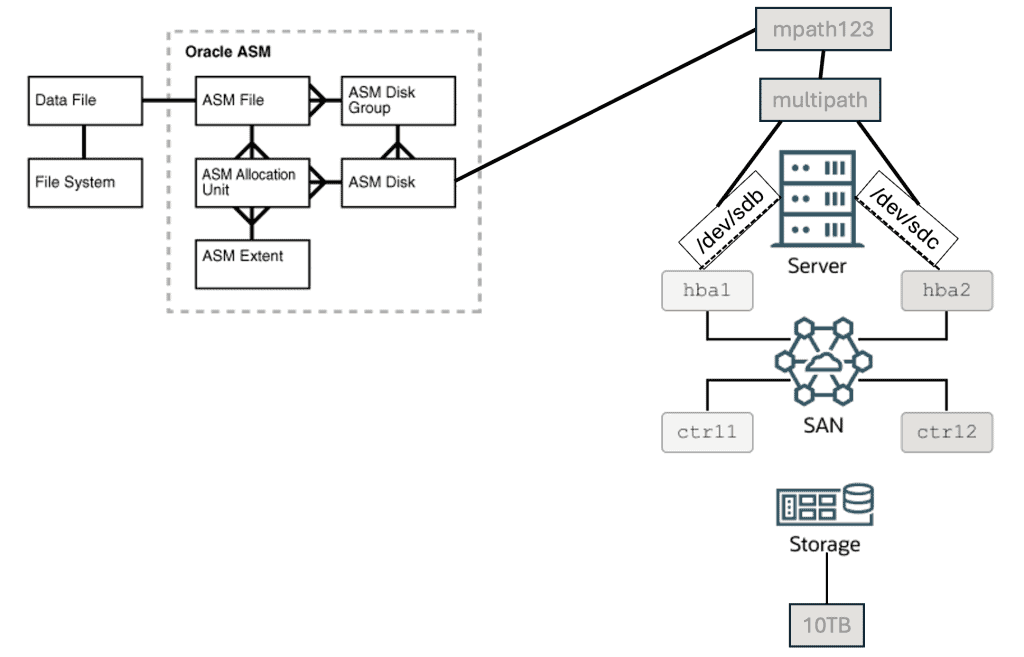

This means that this single 10TB LUN can be visible as “two different” (or even more) disks on the OS side. So, our 10TB LUN can be seen by the operating system as /dev/sdb and /dev/sdc. Each of these OS-visible devices is called a “path”. Take a look at the image below:

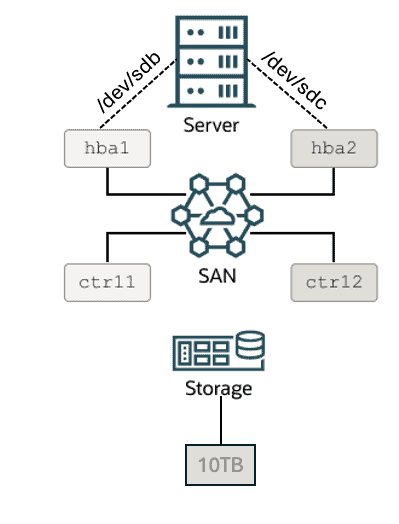

OK, now let’s “merge” our two pictures into one:

I can use /dev/sdb as the path for my disk and use it as an ASM disk; it will work with no issues. But let’s imagine that, for some reason, this path fails (maybe a storage controller fails, or my Fibre Channel link, or an HBA on the DB server). If I am using /dev/sdb and it fails, my disk becomes unavailable to the database, even though it is still reachable through another path (/dev/sdc).
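Before going further, you can check that /dev/sdb and /dev/sdc really are two paths to the same LUN: both report the same WWID (World Wide Identifier). The bash sketch below uses hypothetical helper names (list_sd_wwids, group_paths_by_wwid) and assumes RHEL/OEL 7 or later, where scsi_id is usually found at /usr/lib/udev/scsi_id (on older releases the path and options may differ).

```bash
#!/usr/bin/env bash
# Hypothetical helpers: show which sd devices (paths) belong to the same LUN.

# Location of scsi_id is an assumption (RHEL/OEL 7+); override if needed.
SCSI_ID=${SCSI_ID:-/usr/lib/udev/scsi_id}

# Print "<device> <wwid>" for every whole sd disk (partitions are skipped).
# Needs root and real devices.
list_sd_wwids() {
  local dev
  for dev in /dev/sd*; do
    [[ -b $dev ]] || continue          # skip an unmatched glob or non-block files
    [[ $dev =~ [0-9]$ ]] && continue   # skip partitions such as /dev/sdb1
    printf '%s %s\n' "${dev##*/}" "$("$SCSI_ID" --whitelisted --device="$dev")"
  done
}

# Read "<device> <wwid>" lines on stdin and print one line per WWID,
# followed by every device that reported it (in first-seen order).
# Lines without a WWID (fewer than two fields) are ignored.
group_paths_by_wwid() {
  awk '
    NF >= 2 {
      if (!($2 in paths)) { order[++n] = $2; paths[$2] = $1 }
      else                { paths[$2] = paths[$2] " " $1 }
    }
    END { for (i = 1; i <= n; i++) print order[i] ": " paths[order[i]] }
  '
}
```

On a real server, running `list_sd_wwids | group_paths_by_wwid` as root should show our 10TB LUN as one WWID followed by sdb and sdc: two device names on the same line mean two paths to one LUN.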

This is where Device Mapper Multipath comes into action. DM Multipath is a software component of Linux operating systems that provides path redundancy for storage devices, particularly in Storage Area Networks (SANs). It helps improve storage reliability, availability and performance by managing multiple paths (or routes) between servers and storage arrays. We are receiving our 10TB LUN through two paths (/dev/sdb and /dev/sdc). We should not use an individual path, because it is a SPOF (Single Point of Failure). Instead of using an individual path, we configure a software component that provides path redundancy. So, in our example, if multipath is properly configured, our 10TB disk/LUN also becomes visible as a single multipath device under /dev/mapper, for example mpath123.
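On RHEL/OEL, DM Multipath comes from the device-mapper-multipath package and is usually enabled with mpathconf. The following is a minimal sketch, assuming RHEL/OEL 7 or later and root access; check your storage vendor’s recommended multipath.conf settings before using anything like this in production.

```bash
# Install the DM Multipath tools (package name on RHEL/OEL).
yum install -y device-mapper-multipath

# Create /etc/multipath.conf with default settings and start multipathd.
# --user_friendly_names y names devices mpathN (e.g. mpatha) under /dev/mapper;
# with n, devices are named after their WWID (e.g. /dev/mapper/3600...).
mpathconf --enable --user_friendly_names y --with_multipathd y

# List each multipath device with its WWID, dm-N node and underlying paths
# (for our LUN, the paths would be sdb and sdc).
multipath -ll
```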

When we use multipath, we can have several types of configuration, such as active/passive and active/active. In an active/passive configuration, I/O requests use only one path; if the active path fails, multipath switches I/O to the passive (standby) path. This is multipath’s built-in default, although the built-in settings for many storage arrays use a different policy. In an active/active configuration, I/O requests are distributed across all available paths. An active/active configuration can potentially improve performance by using multiple paths simultaneously for data transfer: it increases the available bandwidth, load-balances I/O requests and optimizes resource utilization. Note that this “performance gain” is not about the time it takes the disks to serve a read or write; it is basically about the bandwidth between the storage and the DB server.

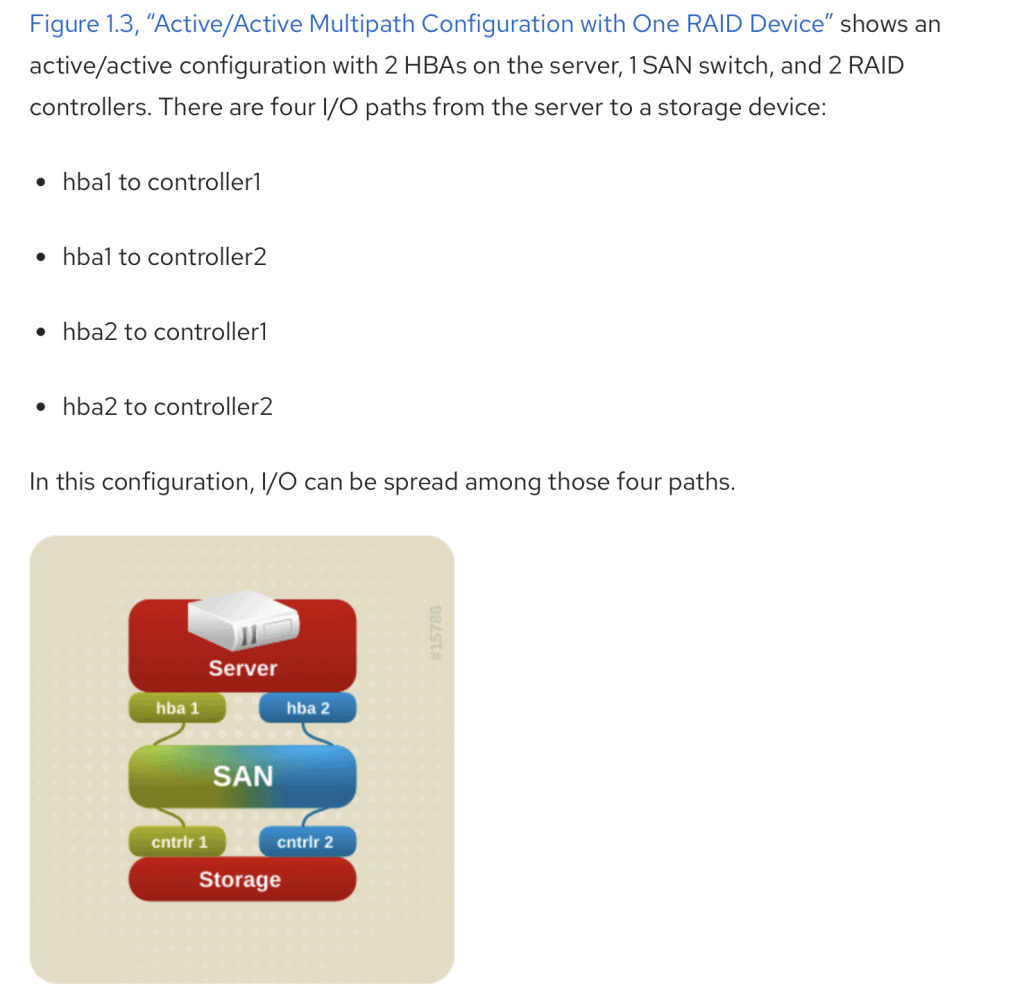
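One way to see which configuration a device is using is to look at its path groups in `multipath -ll` output: with `path_grouping_policy failover` each path sits in its own group and only the group marked `status=active` carries I/O, while with `multibus` all paths share one group. The bash function below is a hypothetical helper that summarises the groups of a single device; it assumes the `multipath -ll` output layout of recent RHEL/OEL releases.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `multipath -ll <device>` output for ONE device on
# stdin and print, for each path group, its status and number of paths.
summarize_path_groups() {
  awk '
    # A path-group line looks like: |-+- policy=... prio=50 status=active
    /policy=/ {
      g++
      status[g] = "unknown"
      if (match($0, /status=[a-z]+/)) status[g] = substr($0, RSTART + 7, RLENGTH - 7)
      paths[g] = 0
      next
    }
    # A path line carries a Host:Channel:Target:LUN tuple, e.g. 1:0:0:1 sdb
    /[0-9]+:[0-9]+:[0-9]+:[0-9]+ / { if (g) paths[g]++ }
    END {
      for (i = 1; i <= g; i++) printf "group %d: status=%s paths=%d\n", i, status[i], paths[i]
    }
  '
}
```

For example, `multipath -ll mpatha | summarize_path_groups` (mpatha being a placeholder name) would report one group per path, one of them active, for an active/passive setup, and a single group containing every path for an active/active setup.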

Edit: As mentioned before, when we use multipath we can have two or more paths. In our example, with two storage controllers and two HBAs, the expected number of paths is four: assuming each HBA can reach the LUN through each controller, that gives 2 × 2 = 4 paths.
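The expected count is simply HBAs × controllers, assuming every HBA is zoned to every controller. The hypothetical bash helpers below enumerate those HBA/controller pairs and compare the expected count with the number of paths found in `multipath -ll` output for one device (path lines are recognised by their Host:Channel:Target:LUN tuple, e.g. `1:0:0:1`).

```bash
#!/usr/bin/env bash
# Hypothetical helpers for checking the number of paths of one multipath device.

# List every HBA/controller pair; each pair is one expected path.
expected_paths() {
  local hbas=$1 controllers=$2 h c
  for ((h = 1; h <= hbas; h++)); do
    for ((c = 1; c <= controllers; c++)); do
      echo "hba$h -> controller$c"
    done
  done
}

# Count path lines in `multipath -ll <device>` output read from stdin.
count_paths() {
  grep -Ec '[0-9]+:[0-9]+:[0-9]+:[0-9]+ '
}

# Usage: multipath -ll <device> | check_paths <hbas> <controllers>
check_paths() {
  local expected=$(( $1 * $2 )) actual
  actual=$(count_paths)
  if (( actual == expected )); then
    echo "OK: $actual/$expected paths"
  else
    echo "WARNING: $actual/$expected paths"
  fi
}
```

In our example, `multipath -ll mpatha | check_paths 2 2` (mpatha being a placeholder name) should print `OK: 4/4 paths` when all four paths are present; a lower count points to a failed or missing path.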

The image below is from the Red Hat documentation:

You can read more about the above image in the following link:

Now you can see why it is so important to use multipath, right? As DBAs, we need to make sure that our database is available, and choosing and configuring the right components properly is just as important as having a well-administered database.

It’s important to say that DM Multipath is the native multipath solution for Linux. Depending on your storage solution, however, you may also have vendor-specific multipath solutions (some of which require a paid license):

- EMC PowerPath;

- NetApp Multipath I/O (MPIO);

- IBM MPIO for AIX;

- Many others.

When we use ASM on Linux, ASM disks can be configured using udev, ASMLib or AFD (ASM Filter Driver). Whichever you choose, please make sure your ASM disks are configured on the multipath devices, not on the individual paths.

For the examples below, let’s assume that all multipath devices are listed as /dev/mapper/3600* (that is, named by their WWIDs).

For AFD, this is how you can configure it:

```
cat /etc/afd.conf

afd_diskstring='/dev/mapper/3600*'
afd_filtering=enable
```

For ASMLib:

The file /etc/sysconfig/oracleasm is a symbolic link that points to /etc/sysconfig/oracleasm-_dev_oracleasm:

```
oracleasm -> /etc/sysconfig/oracleasm-_dev_oracleasm
```

I will show only the part that tells ASMLib to use the multipath devices:

```
cat /etc/sysconfig/oracleasm-_dev_oracleasm

# ORACLEASM_SCANORDER: Matching patterns to order disk scanning
ORACLEASM_SCANORDER="dm"

# ORACLEASM_SCANEXCLUDE: Matching patterns to exclude disks from scan
ORACLEASM_SCANEXCLUDE="sd"
```
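Why do these two settings matter? With multipath, the same ASM disk label is visible on every path (sdb, sdc) and on the multipath device (dm-N, the name it has in /proc/partitions). The bash sketch below is an illustrative model, not ASMLib’s actual code: it assumes ORACLEASM_SCANORDER and ORACLEASM_SCANEXCLUDE are matched as simple name prefixes and that ASMLib uses the first eligible device it finds. The names pick_device and matches_prefix are hypothetical.

```bash
#!/usr/bin/env bash
# Illustrative model only (NOT ASMLib's implementation) of how
# ORACLEASM_SCANORDER / ORACLEASM_SCANEXCLUDE pick one device when the same
# ASM label is visible on several devices. Assumes simple prefix matching.

# Succeed if device name $1 starts with any prefix in the space-separated list $2.
matches_prefix() {
  local dev=$1 p
  for p in $2; do
    [[ $dev == "$p"* ]] && return 0
  done
  return 1
}

# Usage: pick_device "<scanorder>" "<scanexclude>" dev1 dev2 ...
# Prints the first eligible device, or nothing if every device is excluded.
pick_device() {
  local order=$1 exclude=$2 dev p
  shift 2
  # Pass 1: devices matching SCANORDER prefixes, in the order the prefixes are listed.
  for p in $order; do
    for dev in "$@"; do
      if [[ $dev == "$p"* ]] && ! matches_prefix "$dev" "$exclude"; then
        echo "$dev"
        return 0
      fi
    done
  done
  # Pass 2: otherwise, the first device (in the given order) that is not excluded.
  for dev in "$@"; do
    if ! matches_prefix "$dev" "$exclude"; then
      echo "$dev"
      return 0
    fi
  done
  return 1
}
```

With the settings above, `pick_device "dm" "sd" sdb sdc dm-3` prints dm-3 (the multipath device); with both settings empty, the model picks sdb, a single path.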

For udev, the configuration depends on your Linux distribution and version. For example, see:

How To Setup Partitioned Linux Block Devices Using UDEV (Non-ASMLIB) And Assign Them To ASM? (Doc ID 1528148.1)

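```
cat /etc/udev/rules.d/99-oracle-asmdevices.rules

# Example from the note above, in older udev syntax (BUS=, scsi_id -s, NAME=),
# matching a single xv* device; adapt it to your release and LUN.
KERNEL=="xv*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="360a98000375331796a3f434a55354474", NAME="asmdisk1_udev_p1", ACTION=="add|change", OWNER="grid", GROUP="asmadmin", MODE="0660"
```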
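Note that the rule above matches a single, non-multipath device. For a multipath LUN, a common approach on RHEL/OEL 6 and later is to match the dm-N device by its device-mapper UUID, which for multipath maps has the form mpath-&lt;WWID&gt;. The sketch below reuses the WWID from the rule above purely for illustration (replace it with your LUN’s WWID from `multipath -ll`), assumes root access, and overwrites the rules file shown above.

```bash
# Hypothetical example: set ASM ownership on the multipath device itself,
# matched by its device-mapper UUID instead of a single sd/xv path.
cat > /etc/udev/rules.d/99-oracle-asmdevices.rules <<'EOF'
KERNEL=="dm-*", ENV{DM_UUID}=="mpath-360a98000375331796a3f434a55354474", OWNER="grid", GROUP="asmadmin", MODE="0660"
EOF

# Reload the rules and re-apply them to existing devices.
udevadm control --reload-rules
udevadm trigger --type=devices --action=change
```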

I hope this blog post was useful to you.

In the next blog post, I will show how we still faced some issues even with multipath properly configured.

Thank you!

Peace,

Vinicius

Disclaimer

My postings reflect my own views and do not necessarily represent the views of my employer, Accenture.